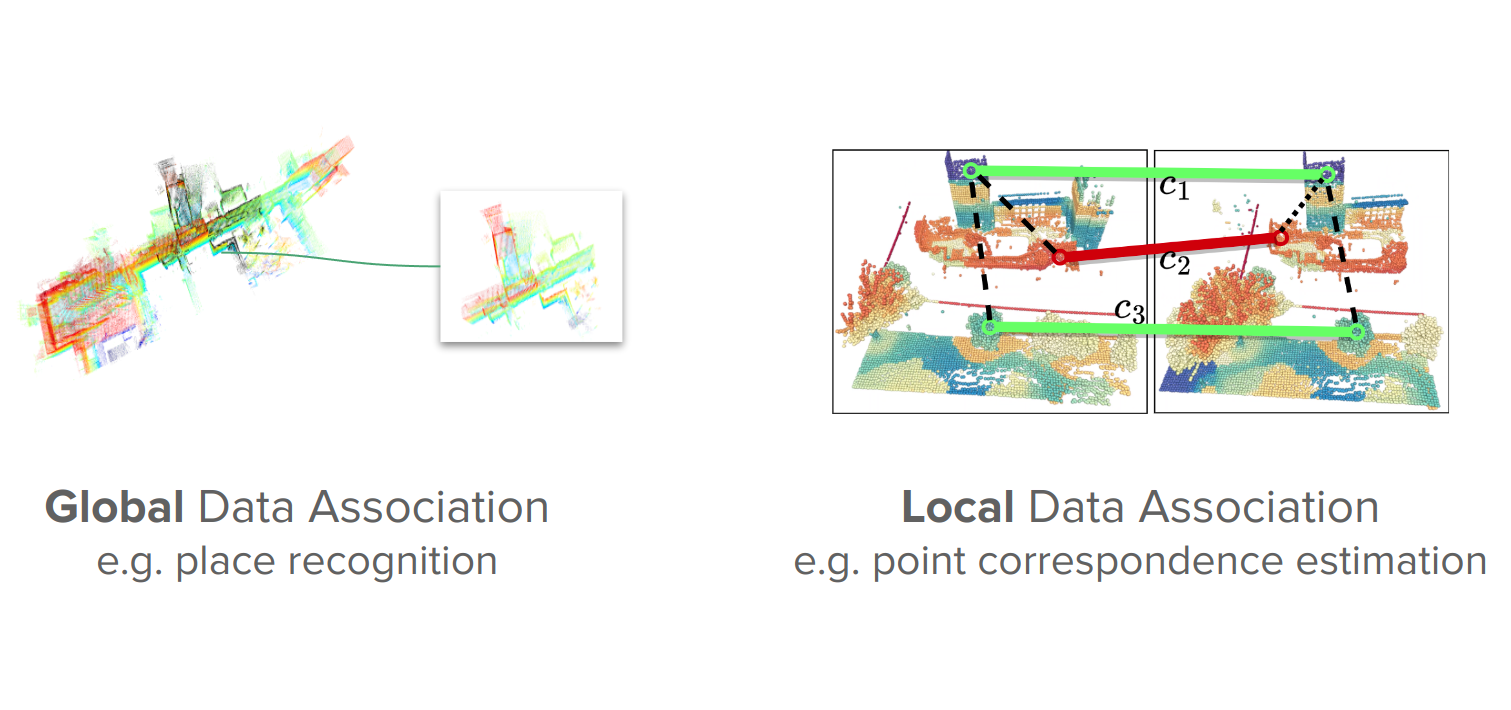

Data association remains a pivotal research area in the pursuit of long-term autonomy, as autonomous agents need to construct and maintain an accurate representation of the environment in which they operate. In summary, data association (also known as correspondence estimation) aims to identify similarities across time in the data a robot observes through its various sensors. Despite extensive research in this field, robust, general-purpose solutions to data association remain an open problem. In the context of 3D Light Detection and Ranging (LiDAR) based data association, this involves the analysis of unordered and sparse scene-level point cloud data, which has for decades proven more challenging than its 2D image counterpart. Despite considerable progress in 3D perception, existing methods for data association remain fragile and limited in applicability, with most works directly applying concepts from 2D computer vision. These methods do not transfer directly to scene-level point cloud data. 3D data captured in real-world environments contains rich geometric structure, which can be exploited to improve the accuracy and robustness of 3D correspondence tasks. This thesis explores how priors on the existing scene geometry, together with the temporal consistency present in mobile robotics, can be utilised to address these limitations. We demonstrate that incorporating such natural constraints provides generalizability and out-of-distribution robustness, which are vital properties for long-term autonomy. The effectiveness of these ideas is demonstrated on tasks including place recognition, re-ranking, metric localization, 3D scene flow, and 4D point-wise trajectory estimation.

Queensland University of Technology. PhD Thesis by Publication, 2024


